Fleiss’ kappa, κ (Fleiss, 1971; Fleiss et al., 2003), is a measure of inter-rater agreement used to determine the level of agreement between two or more raters (also known as “judges” or “observers”) when the method of assessment, known as the response variable, is measured on a categorical scale.

What is the Fleiss kappa coefficient?

Fleiss’ kappa (named after Joseph L. Fleiss) is a statistical measure for assessing the reliability of agreement between a fixed number of raters when assigning categorical ratings to a number of items or classifying items.

What is Cohen’s kappa used for?

Cohen’s kappa is a metric often used to assess the agreement between two raters. It can also be used to assess the performance of a classification model.
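To make the chance correction concrete, here is a minimal, dependency-free sketch of unweighted Cohen’s kappa for two raters, κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e is the agreement expected from each rater’s own label frequencies. The function name `cohens_kappa` is illustrative only; in practice scikit-learn’s `sklearn.metrics.cohen_kappa_score` computes the same statistic.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same, non-empty set of items")
    n = len(rater_a)

    # Observed agreement: share of items where both raters chose the same label.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: for each label, multiply the two raters' marginal
    # proportions, then sum over all labels either rater used.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    labels = counts_a.keys() | counts_b.keys()
    p_e = sum(counts_a[c] * counts_b[c] for c in labels) / n**2

    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


a = ["yes", "yes", "no", "no", "yes"]
b = ["yes", "no", "no", "no", "yes"]
print(round(cohens_kappa(a, b), 3))  # 0.615
```

Here the raters agree on 4 of 5 items (p_o = 0.8), but their label frequencies alone would produce p_e = 0.48, so kappa is noticeably lower than the raw 80% agreement.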

Fleiss Kappa [Simply Explained]

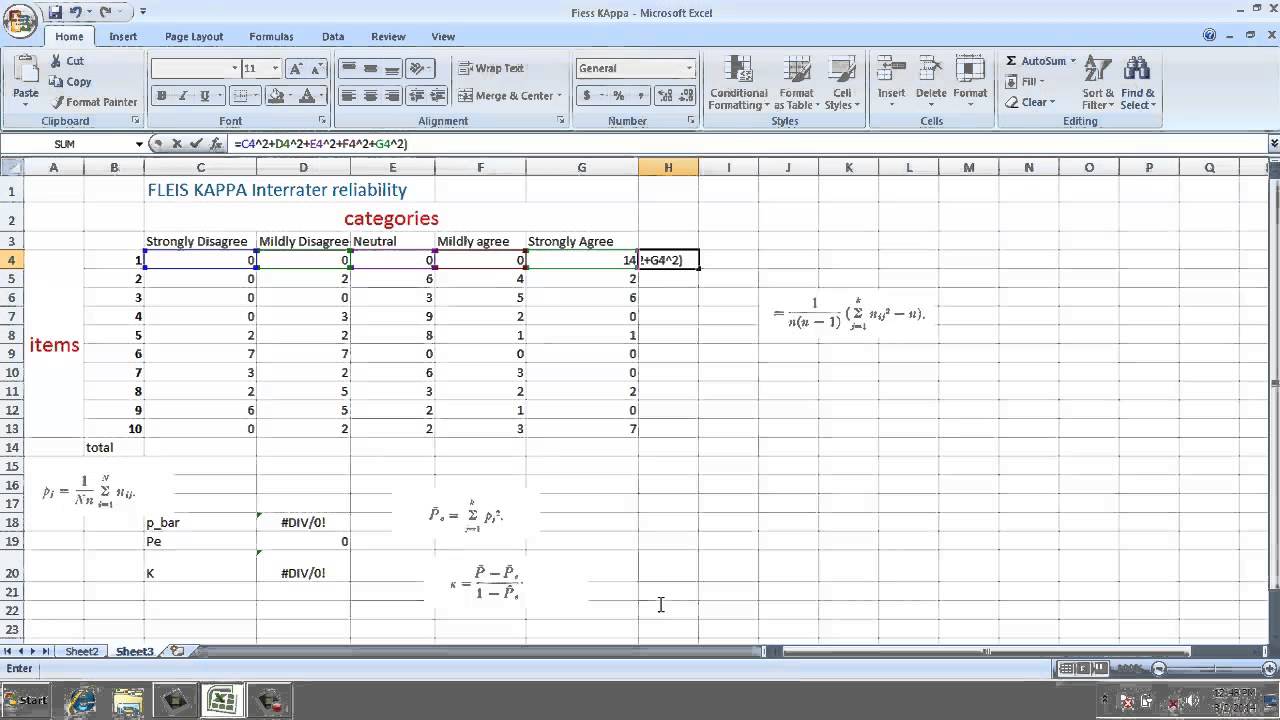

Images related to the topicFleiss Kappa [Simply Explained]

![Fleiss Kappa [Simply Explained]](https://i.ytimg.com/vi/ga-bamq7Qcs/maxresdefault.jpg)

Is Fleiss kappa weighted?

Fleiss’ kappa extends Cohen’s kappa to more than two raters. As with Cohen’s kappa, no weighting is used and the categories are considered to be unordered. Let n be the number of subjects, k the number of evaluation categories, and m the number of judges for each subject. For example, in Example 1 of Cohen’s Kappa, n = 50, k = 3 and m = 2.
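A minimal plain-Python sketch of Fleiss’ kappa using the n, k, m notation above, assuming the ratings have already been tallied into an n × k table in which each cell counts how many of the m judges put that subject in that category (so every row sums to m). The function name `fleiss_kappa` is hypothetical; statsmodels offers a library version as `statsmodels.stats.inter_rater.fleiss_kappa`. The example table is the 10-subject, 14-rater, 5-category example from the Wikipedia article on Fleiss’ kappa.

```python
from typing import Sequence


def fleiss_kappa(table: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa from an n x k table of rating counts.

    table[i][j] is the number of the m raters who put subject i in
    category j; every row must sum to the same m (judges per subject).
    """
    n = len(table)
    if n == 0:
        raise ValueError("need at least one subject")
    k = len(table[0])
    m = sum(table[0])
    if m < 2 or any(len(row) != k or sum(row) != m for row in table):
        raise ValueError("every row needs k counts summing to the same m >= 2")

    # P_i: fraction of agreeing rater pairs for subject i; P_bar is their mean.
    p_i = [sum(c * (c - 1) for c in row) / (m * (m - 1)) for row in table]
    p_bar = sum(p_i) / n

    # p_j: share of all n*m ratings in category j; P_e is chance agreement.
    p_j = [sum(row[j] for row in table) / (n * m) for j in range(k)]
    p_e = sum(p * p for p in p_j)

    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_bar - p_e) / (1 - p_e)


example = [
    [0, 0, 0, 0, 14],
    [0, 2, 6, 4, 2],
    [0, 0, 3, 5, 6],
    [0, 3, 9, 2, 0],
    [2, 2, 8, 1, 1],
    [7, 7, 0, 0, 0],
    [3, 2, 6, 3, 0],
    [2, 5, 3, 2, 2],
    [6, 5, 2, 1, 0],
    [0, 2, 2, 3, 7],
]
print(round(fleiss_kappa(example), 4))  # 0.2099
```

For this table the mean observed agreement is about 0.37802 and the chance agreement about 0.2128, the same figures that appear in the spreadsheet calculation further down this page.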

Is Kappa a measure of reliability?

The kappa statistic, or Cohen’s kappa, is a statistical measure of inter-rater reliability for categorical variables. In fact, it is almost synonymous with inter-rater reliability. Kappa is used when two raters both apply a criterion based on a tool to assess whether or not some condition occurs.

What is a good Fleiss kappa?

Kappa is the proportion of agreement over and above chance agreement.

…

Interpreting the results from a Fleiss’ kappa analysis.

| Value of κ | Strength of agreement |
|---|---|
| < 0.20 | Poor |
| 0.21-0.40 | Fair |
| 0.41-0.60 | Moderate |
| 0.61-0.80 | Good |
| 0.81-1.00 | Very good |

How do I download Fleiss kappa in SPSS?

- Open IBM SPSS Statistics.

- Navigate to Utilities > Extension Bundles > Download and Install Extension Bundles.

- Search for the name of the extension and click OK. Your extension will then be available.

How is Fleiss kappa calculated?

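In the spreadsheet worked example this figure comes from, the value in cell C18 is calculated as: Fleiss’ kappa = (0.37802 – 0.2128) / (1 – 0.2128) = 0.2099, where 0.37802 is the mean observed agreement across subjects and 0.2128 is the agreement expected by chance.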
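For reference, the general formula behind that calculation can be written with the n, k, m notation introduced earlier, plus one added symbol, n_ij, for the number of raters who assigned subject i to category j. Plugging in the two quoted values reproduces the result:

```latex
\begin{aligned}
P_i &= \frac{1}{m(m-1)} \sum_{j=1}^{k} n_{ij}\,(n_{ij}-1), &
\bar{P} &= \frac{1}{n} \sum_{i=1}^{n} P_i, \\
p_j &= \frac{1}{n m} \sum_{i=1}^{n} n_{ij}, &
\bar{P}_e &= \sum_{j=1}^{k} p_j^{2}, \\
\kappa &= \frac{\bar{P} - \bar{P}_e}{1 - \bar{P}_e}
        = \frac{0.37802 - 0.2128}{1 - 0.2128} \approx 0.2099 .
\end{aligned}
```

The numerator is the agreement achieved beyond chance, and the denominator is the most agreement beyond chance that was possible.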

How do you use Kappa?

Kappa is widely used as an emote in Twitch chats to signal that you are being sarcastic or ironic, trolling, or otherwise playing around with someone. It is usually typed at the end of a string of text but, as is often the case on Twitch, it is also used on its own or repeatedly (to spam someone).

What is Kappa in naive Bayes?

The Kappa statistic (or value) is a metric that compares an Observed Accuracy with an Expected Accuracy (random chance). The kappa statistic is used not only to evaluate a single classifier, but also to evaluate classifiers amongst themselves.
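For a classifier, this comparison is usually computed from a confusion matrix: observed accuracy is the share of items on the diagonal, and expected accuracy is what a classifier with the same row and column totals would reach by chance. A minimal sketch, assuming rows are the reference (true) classes and columns the predicted classes; the function name `kappa_from_confusion` and the example matrix are hypothetical.

```python
from typing import Sequence


def kappa_from_confusion(matrix: Sequence[Sequence[int]]) -> float:
    """Cohen's kappa from a square confusion matrix.

    matrix[i][j] counts items whose reference class is i and whose
    predicted class is j.
    """
    k = len(matrix)
    if k == 0 or any(len(row) != k for row in matrix):
        raise ValueError("confusion matrix must be square and non-empty")
    total = sum(sum(row) for row in matrix)
    if total == 0:
        raise ValueError("confusion matrix contains no items")

    # Observed accuracy: correctly classified items lie on the diagonal.
    observed = sum(matrix[i][i] for i in range(k)) / total

    # Expected accuracy: chance agreement given the row (reference) and
    # column (predicted) totals.
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total**2

    if expected == 1:
        raise ValueError("kappa is undefined when expected accuracy is 1")
    return (observed - expected) / (1 - expected)


print(round(kappa_from_confusion([[45, 5], [10, 40]]), 2))  # 0.7
```

In this example the observed accuracy is 0.85 and the expected accuracy is 0.5, so kappa credits the classifier only for the 0.35 it gains over chance, out of a possible 0.5.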

What is the difference between ICC and Kappa?

Though both measure inter-rater agreement (reliability of measurements), the kappa statistic is used for categorical variables, while the ICC (intraclass correlation coefficient) is used for continuous quantitative variables.

What is weighted kappa?

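Cohen’s weighted kappa is broadly used in cross-classification as a measure of agreement between observed raters. It is an appropriate index of agreement when ratings are on an ordinal scale, because it gives partial credit to disagreements between nearby categories; for nominal scales with no order structure, the unweighted kappa is used instead.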
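For ordinal ratings, weighted kappa can be computed as 1 minus the ratio of observed to chance-expected weighted disagreement. A minimal sketch, assuming the two raters’ ratings are coded as integers 0 to n_categories − 1 and using the common linear (|i − j|) or quadratic ((i − j)²) disagreement weights; the function name `weighted_kappa` is hypothetical. scikit-learn’s `cohen_kappa_score` accepts `weights="linear"` or `weights="quadratic"` for the same purpose.

```python
from typing import Sequence


def weighted_kappa(rater_a: Sequence[int], rater_b: Sequence[int],
                   n_categories: int, weights: str = "linear") -> float:
    """Cohen's weighted kappa for two raters using ordinal codes 0..n_categories-1.

    A disagreement between categories i and j costs |i - j| ("linear") or
    (i - j) ** 2 ("quadratic"); exact agreement costs nothing.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same, non-empty set of items")
    if weights not in ("linear", "quadratic"):
        raise ValueError("weights must be 'linear' or 'quadratic'")
    k = n_categories
    if any(not 0 <= r < k for r in (*rater_a, *rater_b)):
        raise ValueError("ratings must be integers in 0..n_categories-1")
    power = 1 if weights == "linear" else 2
    n = len(rater_a)

    # Cross-tabulate: counts[i][j] = items rated i by rater A and j by rater B.
    counts = [[0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        counts[a][b] += 1
    totals_a = [sum(counts[i]) for i in range(k)]
    totals_b = [sum(counts[i][j] for i in range(k)) for j in range(k)]

    def w(i: int, j: int) -> int:
        return abs(i - j) ** power

    # Weighted disagreement actually observed vs. expected from the marginals.
    observed = sum(w(i, j) * counts[i][j] for i in range(k) for j in range(k)) / n
    expected = sum(w(i, j) * totals_a[i] * totals_b[j]
                   for i in range(k) for j in range(k)) / n**2
    if expected == 0:
        raise ValueError("weighted kappa is undefined when expected disagreement is 0")
    return 1 - observed / expected


a = [0, 1, 2, 2]
b = [0, 2, 2, 1]
print(round(weighted_kappa(a, b, 3, "linear"), 3))     # 0.429
print(round(weighted_kappa(a, b, 3, "quadratic"), 3))  # 0.636
```

Both disagreements in the example are between adjacent categories, so the quadratic weights, which penalise near misses lightly relative to distant ones, give the higher score.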

Can you use Cohen’s kappa for 3 raters?

The original formula for Cohen’s kappa does not allow you to calculate inter-rater reliability for more than two raters; Fleiss’ kappa is the usual extension to a fixed number of raters per subject.

What is Kappa statistics in accuracy assessment?

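Another accuracy indicator is the kappa coefficient, which measures how the classification results compare to values assigned by chance. In practice it usually falls between 0 and 1, although it can range from −1 to 1, with negative values meaning agreement is worse than chance. A kappa coefficient of 0 means there is no agreement between the classified image and the reference image beyond what chance alone would produce.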

What is Kappa quality?

Kappa is the ratio of the proportion of times the raters agree (adjusted for agreement by chance) to the maximum proportion of times the raters could have agreed (adjusted for agreement by chance).

Is a higher Kappa good?

“Cohen suggested the Kappa result be interpreted as follows: values ≤ 0 as indicating no agreement and 0.01–0.20 as none to slight, 0.21–0.40 as fair, 0.41– 0.60 as moderate, 0.61–0.80 as substantial, and 0.81–1.00 as almost perfect agreement.”
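The bands in the quotation above translate directly into a lookup. A minimal sketch; the function name `describe_kappa` is hypothetical, and it assumes each band’s upper limit is inclusive so that values falling in the small gaps between bands (for example 0.205) go to the lower band.

```python
def describe_kappa(kappa: float) -> str:
    """Label a kappa value using the interpretation bands quoted above."""
    if not -1.0 <= kappa <= 1.0:
        raise ValueError("kappa must lie between -1 and 1")
    if kappa <= 0:
        return "no agreement"
    if kappa <= 0.20:
        return "none to slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


print(describe_kappa(0.2099))  # fair
```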

What is a good percentage for inter-rater reliability?

Inter-rater reliability was deemed “acceptable” if the IRR score was ≥75%, following a rule of thumb for acceptable reliability [19]. IRR scores between 50% and < 75% were considered to be moderately acceptable and those < 50% were considered to be unacceptable in this analysis.
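The passage does not say how its IRR score was computed. Assuming it is simple percent agreement between two raters, the rule of thumb can be sketched as below; both function names are hypothetical. Unlike kappa, this raw percentage is not corrected for agreement expected by chance.

```python
from typing import Hashable, Sequence


def percent_agreement(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Percentage of items on which two raters gave the same rating."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same, non-empty set of items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100 * matches / len(rater_a)


def irr_verdict(score: float) -> str:
    """Apply the >= 75% / 50% to < 75% / < 50% thresholds described above."""
    if score >= 75:
        return "acceptable"
    if score >= 50:
        return "moderately acceptable"
    return "unacceptable"


score = percent_agreement(["a", "a", "b", "b"], ["a", "b", "b", "b"])
print(score, irr_verdict(score))  # 75.0 acceptable
```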

What is inter scorer reliability?

Inter-rater reliability is the extent to which two or more raters (or observers, coders, examiners) agree. It addresses the issue of consistency of the implementation of a rating system. Inter-rater reliability can be evaluated by using a number of different statistics.

How do you test for inter rater reliability in SPSS?

Specify Analyze > Scale > Reliability Analysis. Specify the raters as the variables, click Statistics, check the box for Intraclass correlation coefficient, choose the desired model, click Continue, then OK.

How does SPSS calculate weighted kappa?

SPSS does not have an option to calculate a weighted kappa. To do so in SPSS, you need to create a variable containing the desired weights and then select Data > Weight Cases….

How do you do kappa statistics in SPSS?

- Click Analyze > Descriptive Statistics > Crosstabs… …

- You need to transfer one variable (e.g., Officer1) into the Row(s): box, and the second variable (e.g., Officer2) into the Column(s): box. …

- Click on the Statistics button. …

- Select the Kappa checkbox. …

- Click on the Continue button. …

- Click on the OK button.

What is Kappa inter-rater reliability?

Cohen’s kappa statistic measures interrater reliability (sometimes called interobserver agreement). Interrater reliability, or precision, is achieved when your data raters (or collectors) give the same score to the same data item.

What is inter-rater reliability example?

Interrater reliability is the most easily understood form of reliability, because everybody has encountered it. For example, any sport that uses judges, such as Olympic ice skating or a dog show, relies upon human observers maintaining a high degree of consistency with one another.

What is inter annotator agreement?

Inter-annotator agreement is a measure of how well two (or more) annotators can make the same annotation decision for a certain category.
